ClarkVision.com

| Home | Galleries | Articles | Reviews | Best Gear | Science | New | About | Contact |

The Depth-of-Field Myth and Digital Cameras

by Roger N. Clark

A commonly cited advantage of smaller digital cameras is their greater depth-of-field. This is incorrect.

Aperture in this article refers to the effective diameter of the lens, or iris diaphragm within the lens (some uses of aperture in photography refer to f/ratio). So if you understand the above paragraph describing the aperture (again, lens diameter not f/ratio) as controlling depth of field, you need not read further. The discussion below was derived from different ways of explaining the effects of the above concept.

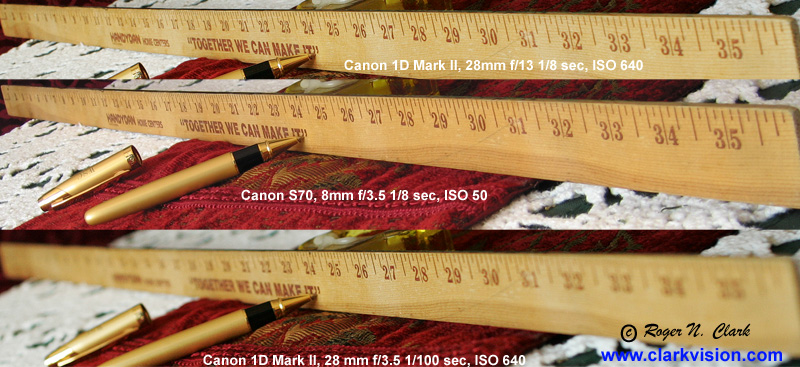

Figure 1. The depth of field from a Canon 1D Mark II camera is compared to that from a Canon S70. The lens aperture diameter is 2.2 mm in the top image, 2.3 mm in the middle image, and 8.0 mm in the bottom image. When the aperture diameters are equal (2.2 and 2.3 mm are close enough), we see the perceived depth of field to be the same.

The Canon 1D Mark II camera has a pixel pitch (pixel spacing) of 8.2 microns. The Canon S70 pixel pitch is 2.3 microns. The ratio of the pixel pitches is 8.2/2.3 = 3.56. If we keep the f/ratio constant between the two cameras (see Figure 1, bottom two frames, images at f/3.5), the larger pixel camera has a much smaller depth of field. But if we equalize the apertures and exposure times (1/8 second, top two frames in Figure 1), the two cameras record the same depth of field and collect the same number of photons in the pixels in each camera. To make the depths of field equal, the focal lengths have the same ratio as the ratio of the pixel pitch, and the f/stop changes by 3.7 stops (f/3.5 on the small camera to f/13 on the large camera: f/3.5 * 3.56 = f/12.5). The ISO on the large pixel camera was increased by the same factor as the reduction in light due to the smaller aperture to give the same relative intensities in the image (ISO 50 * 3.56 * 3.56 = 634, close to the nearest available ISO of 640). Note: changing ISO does not change the number of photons recorded, only how the signal is amplified.
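The arithmetic in the paragraph above can be sketched in a few lines of Python. This is only an illustration, not a tool used to make Figure 1: the function name `equivalent_settings` and its parameters are hypothetical, and it assumes both cameras have the same pixel count and field of view so that focal length scales with pixel pitch.

```python
import math

def equivalent_settings(small_pitch_um, large_pitch_um, small_f_ratio, small_iso):
    """Scale f/ratio and ISO from the small-pixel camera to the large-pixel one.

    Assumption: same pixel count and field of view, so the focal length
    scales with the pixel pitch ratio.
    """
    ratio = large_pitch_um / small_pitch_um   # pixel pitch ratio, e.g. 8.2 / 2.3
    large_f_ratio = small_f_ratio * ratio     # keeps the aperture diameter equal
    large_iso = small_iso * ratio ** 2        # offsets the light lost by stopping down
    stops = 2 * math.log2(ratio)              # f/stop change expressed in stops
    return ratio, large_f_ratio, large_iso, stops

ratio, f_ratio, iso, stops = equivalent_settings(2.3, 8.2, 3.5, 50)
print(f"pitch ratio {ratio:.2f}, f/{f_ratio:.1f}, ISO {iso:.0f}, {stops:.1f} stops")
```

Because the sketch carries the unrounded pitch ratio through, the ISO comes out a point or two above the 634 quoted in the text, which uses the rounded 3.56; either way the nearest available setting is ISO 640.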

Figure 1 illustrates another property of large versus small cameras: the larger camera has a smaller depth-of-field when at the same f/ratio as the small camera. The small sensor camera has a subset of the depth-of-field range of the larger camera.

Depth-of-field is the property of near and far objects in the field of view of a lens being in acceptably sharp focus. What is acceptably sharp is a definition called the circle of confusion. The circle of confusion for depth-of-field calculations is defined for the final print or final displayed image. For example, it might be defined as 1/4 mm on an 8x10-inch print. This means that the near and far points on the printed image produce out-of-focus blur no larger than 1/4 mm.

The amount of blur due to being out of focus, the circle of confusion, is dependent on the diameter of the lens and its focal length. Different objects focus at different distances behind a lens, with objects at great distances (like the moon and stars) focusing closest to the lens. Closer things in the field of view, like a flower in the foreground, focus at a greater distance behind the lens. The longer the focal length, the greater the change in the focused image from near and far things in the field of view of the camera. The greater the lens diameter, the greater the blur caused by the focus shift.

The f/ratio of the lens is the lens focal length (the focal length of objects at infinity) divided by the lens diameter. The bottom line after some complex calculations shows that the depth of field is proportional to the f/ratio squared. In other words, an f/4 lens has 1/4 the depth-of-field of an f/8 lens ([4/8]*[4/8]=1/4). (See references at the end of this article.)

This f/ratio squared property is where the depth-of-field myth gets its origins. Small Point and Shoot (P&S) digital cameras use smaller sensors and shorter focal length lenses than, for example, large-sensor Digital Single Lens Reflex (DSLR) cameras. Let's examine two real cameras.

The Sensor A / Sensor B focal plane image size ratio averages 8.2/2.2 = 3.7x (the horizontal and vertical dimensions are slightly different so we'll use the pixel spacing as the average). For the same approximate field of view, Sensor B uses lenses 3.7 times shorter in focal length. So some might think that, given an f/4 lens on both cameras, Sensor B has 3.7 squared = 13.7 times greater depth-of-field. (The factor 3.7 is also called the crop factor or the lens multiplier factor.) But such a conclusion is incorrect. There are two major reasons.

The first reason the crop factor squared improvement in depth-of-field is incorrect is due to magnification. The depth-of-field is defined for the final image, and to fairly compare the images from the two cameras, the images should be enlarged to the same size. Let's use 8x12 inches (203 x 305 mm; we will ignore the small form factor difference). The image from Sensor A needs to be enlarged about 10.6x and the image from the small Sensor B about 38x. Because the image from the small sensor must be enlarged more, the blur due to focus is magnified more. That extra magnification equals the crop factor discussed above. This changes the advantage of the small camera from the crop factor squared (3.7 * 3.7 in our example) to just the crop factor, or 3.7 times better depth-of-field. But there is another thing to consider.

The second property to consider in the depth-of-field comparison is the total quality of the final image being produced. The larger sensor camera collects more photons per pixel per unit time (exposure). The total photons collected is related to the diameter of the lens. An f/4 lens on each of our example cameras, Sensor A and Sensor B, delivers a different number of photons to a pixel. The larger camera delivers more photons. In our example, Sensor A delivers 3.7*3.7 = 13.7 times more photons per pixel. For more on this subject see: The f/ratio Myth and Digital Cameras. So the large camera is producing a much higher signal-to-noise ratio image than the small camera.

Let's make the number of photons the same for the two cameras so the image quality is the same in the final print. You can do that by decreasing the aperture by the crop factor. In our case, a 3.7x reduction in aperture results in a decrease of 3.7*3.7 = 13.7x in the number of photons delivered to each pixel. If the small camera, Sensor B, was at f/4, then the large camera would be at 4*3.7 = f/15. But now you say the exposure time would be longer. It need not be, because the number of photons collected by the two cameras is the same. The exposures by the two cameras would be the same by simply boosting the ISO on the large camera. Boosting the ISO does not change the number of photons collected, nor the sensitivity of the camera's sensor; it only changes how the signal from each pixel is digitized. If the small camera was at ISO 50, then setting the ISO on the large camera, Sensor A in our example, to ISO 800 would make the signal-to-noise ratio of the images from the two cameras the same, assuming the same quantum efficiency and read noise from the devices in the two cameras. In practice, the measured read noise of DSLRs tends to be smaller than that of the lower cost P&S cameras, so the signal-to-noise ratios of the image data would appear slightly better in the shadows in the large camera compared to the small one, and be essentially equal in the highlights.
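As a rough check on the photon argument, the relative number of photons reaching a pixel can be modeled as proportional to the pixel area divided by the f/ratio squared. The sketch below rests on that simplifying assumption (same scene, same exposure time, same quantum efficiency); the function name `relative_photons_per_pixel` is hypothetical and the units are arbitrary.

```python
def relative_photons_per_pixel(pixel_pitch_um, f_ratio, exposure_s=1.0):
    """Relative photon count per pixel, in arbitrary units.

    Assumption: light per unit area at the focal plane falls as 1 / f_ratio**2,
    and a pixel collects in proportion to its area (pitch squared).
    """
    return exposure_s * (pixel_pitch_um / f_ratio) ** 2

crop = 8.2 / 2.2                                             # pixel pitch ratio from the text
small = relative_photons_per_pixel(2.2, 4.0)                 # Sensor B at f/4
large_same_f = relative_photons_per_pixel(8.2, 4.0)          # Sensor A at f/4
large_stopped = relative_photons_per_pixel(8.2, 4.0 * crop)  # Sensor A near f/15

print(f"same f/ratio: {large_same_f / small:.1f}x the photons per pixel")
print(f"stopped down by the crop factor: {large_stopped / small:.2f}x")
```

With the unrounded pitch ratio the first figure lands a little above the 13.7 quoted in the text, which uses 3.7; the second should come out at 1, the equal-photon case.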

Discussion: How Can You Predict the Noise Performance?

All decent digital cameras are photon noise limited for any signal above a few hundred photons and read noise limited for less than a few tens of photons, for reasonable exposures of seconds and less. For long exposures into minutes, thermal noise adds to the equation. Every DSLR tested by myself and several others behaves this way, and so do the tested P&S cameras. Because the cameras have become so good (basically over approximately the last 5 years), we can now understand the basic performance of systems and accurately predict what they can do.

Here is an explanation and test data for a DSLR that shows the Canon 1D Mark II DSLR, used in the example above, is photon noise limited: Procedures for Evaluating Digital Camera Sensor Noise, Dynamic Range, and Full Well Capacities; Canon 1D Mark II Analysis http://clarkvision.com/articles/evaluation-1d2 .

You can also estimate quantum efficiency and count photons with digital cameras: Digital Cameras: Counting Photons, Photometry, and Quantum Efficiency http://clarkvision.com/articles/digital.photons.and.qe .

Table 1-3 at this page shows derived noise characteristics for photon-noise limited cameras: The Signal-to-Noise of Digital Camera images and Comparison to Film http://clarkvision.com/articles/digital.signal.to.noise .

So, the bottom line is that photon noise limited cameras have predictable performance for normal photography (outdoor, even indoor lower light conditions). Photon noise limited performance is the best possible, and it is because cameras are performing at this impressive level that we can explore performance issues, like depth of field, in ways never possible with film cameras.

Discussion: But Depth of Field is a Spatial Detail Issue, not a Photon Noise Issue

Actually, photon noise is a spatial issue too. In order to collect more photons, one needs a larger pixel. An analogy is to collect rain drops in a rain storm. You can collect more drops with a larger bucket, or you can collect the same number of drops in the larger bucket as with a smaller bucket, but the larger bucket needs less time. Same with pixels in a digital camera.

So to make the test of producing the same quality image, with the same depth of field and the same signal-to-noise ratio, one must consider changing aperture to compensate for the increased numbers of photons that a camera with larger pixels collects. When that is done, again, due to spatial sizes of pixels affecting depth of field, we find that depth of field for constant image quality does not change with the size of the camera (with the corresponding size of the pixels).
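The claim can be checked numerically with the standard thin-lens depth-of-field approximation (hyperfocal distance H = f^2/(N c) + f), the same kind of formula used by common depth-of-field calculators. The sketch below is not taken from this article's measurements: the 10 mm focal length, 0.006 mm circle of confusion, and 1 m subject distance are hypothetical values, and the circle of confusion is assumed to scale with sensor size because the smaller image is enlarged more.

```python
def depth_of_field_mm(focal_mm, f_ratio, coc_mm, subject_mm):
    """Total depth of field from the standard thin-lens approximation."""
    hyperfocal = focal_mm ** 2 / (f_ratio * coc_mm) + focal_mm
    if subject_mm >= hyperfocal:
        return float("inf")  # far limit reaches infinity
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return far - near

scale = 3.56                                                 # sensor size ratio from Figure 1
focal, f_ratio, coc, subject = 10.0, 3.5, 0.006, 1000.0      # hypothetical small camera

dof_small = depth_of_field_mm(focal, f_ratio, coc, subject)
# Large camera, same f/ratio: focal length and circle of confusion scale up.
dof_large_same_f_ratio = depth_of_field_mm(focal * scale, f_ratio, coc * scale, subject)
# Large camera, same aperture diameter: the f/ratio scales up as well.
dof_large_equal_aperture = depth_of_field_mm(focal * scale, f_ratio * scale, coc * scale, subject)

print(f"small camera:              {dof_small:.0f} mm")
print(f"large, same f/ratio:       {dof_large_same_f_ratio:.0f} mm")
print(f"large, same aperture dia.: {dof_large_equal_aperture:.0f} mm")
```

Under these assumptions the equal-aperture case should agree with the small camera to within a few percent, while the same-f/ratio case gives the large camera a depth of field several times smaller.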

Discussion: But Small Cameras Seem to Show Images with a Larger Depth of Field

Figure 1, bottom two frames illustrate this property of a larger depth with a smaller camera. It is true that images from smaller cameras tend to show larger depth of field than large DSLR cameras, but that is because people do not understand the issues discussed on this page. If one understands the issues, then one can equalize the image quality and the two cameras would produce the same depth of field. Some photographers may not need the increased depth of field in an image and would not want their large pixel DSLR image to have the lower signal-to-noise ratios that a smaller camera delivers. The photographer with the DSLR has more choices to control the final image quality, but one must understand the complexities if one wants to take advantage of the range of choices.

Another issue is that if the small camera is used at a smaller aperture, like f/5.6, then the DSLR's lens may not stop down far enough to get an equal depth of field. That may be true, but that is a lens limitation, not a fundamental physics issue. It does indicate that there are practical limits to how far this equalization can be taken with commercial equipment.

With all this discussion about larger pixel DSLRs making better images than small pixel P&S cameras, P&S cameras still have an important place. You can still take great pictures with a P&S camera. They are smaller, lighter and can be taken places more easily. But there are more conditions where a DSLR can do better, if you are willing to pay the higher price of the camera and lug the camera around with its greater bulk and weight.

Discussion: If my main interest is large DOF photography (I often shoot at ~F13 with a full-frame camera, even with tilt-shift lenses), and noise is not an issue, is it correct that softening due to diffraction will be sensor size invariant for equal number of pixels, angle of view, and DOF?

If the lens aperture diameter is the same for all sensor sizes, then DOF excluding diffraction will be the same regardless of sensor size. As we shrink the sensor size, the focal length must be decreased to give the same field of view, so the f-ratio gets faster and the diffraction spot gets smaller in the same proportion as the sensor. Thus, the diffraction softening will be sensor size invariant for constant aperture diameter.

Discussion: At apertures like f/13, where diffraction becomes more significant, do the most recent high resolution sensors with 50MP, or even 100MP, still provide more detail than sensors with say 36MP or less? Does this effectively become a SNR issue (when trying to undo diffraction losses through sharpening) because high frequency contrast is reduced, and if so, does that mean there is a sensor size advantage after all in terms of preserving details in this case?

The Canon 5DS and 5DSr 50 megapixel cameras have 4.09 micron pixels, basically a scaled up Canon 7D2. At f/13 0% MTF is around 10 microns, so such high resolution would not resolve close lines any more than a sensor with larger pixels. But small detail will be better defined and the images will look sharper. You can see that in the images in Figure 9 here, which shows 6.25 micron pixels against 4.09 micron pixels. The lens was wide open at f/1.4, so the star images are worse from aberrations than diffraction at f/13, and there is a slight improvement in detail with the smaller pixels. Pixels are sampling an image from the lens, and if you look at this article on sampling, to digitize a diagonal line as a smooth line, or a spot as a round spot, sampling must be on the order of 6 samples per diffraction spot diameter or better. At f/13 with a diffraction spot diameter of about 17 microns, you would see image quality improvement down to about 3 micron pixels.

Note too that with larger pixels a blur filter is needed over the sensor, but not with smaller pixels, so that helps the smaller pixels see more detail.
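The 17 micron and 3 micron figures above can be reproduced with the usual Airy disk formula, in which the diameter to the first dark ring is 2.44 times the wavelength times the f/ratio. The wavelength of 0.55 microns (green light) is an assumption, as are the function name and the choice of exactly 6 samples across the spot; treat this as a sketch rather than a precise optical model.

```python
def diffraction_spot_diameter_um(f_ratio, wavelength_um=0.55):
    """Airy disk diameter (to the first dark ring) in microns.

    Assumption: green light at 0.55 microns unless another wavelength is given.
    """
    return 2.44 * wavelength_um * f_ratio

samples_per_spot = 6                      # sampling guideline quoted in the text
spot = diffraction_spot_diameter_um(13)   # f/13
pixel = spot / samples_per_spot           # pixel pitch that gives 6 samples per spot

print(f"f/13 diffraction spot: {spot:.1f} microns")
print(f"pixel pitch for {samples_per_spot} samples per spot: {pixel:.1f} microns")
```

The same function shows why diffraction softening is invariant for constant aperture diameter: halving the sensor size halves the f/ratio, so the spot shrinks in step with the sensor.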

Discussion: Another Way to Look at the Problem

Some people have had difficulty understanding the concepts, so here is another way of looking at the problem. Let's start from the beginning and work through things one step at a time. First rule: don't jump ahead to conclusions.

Question. Given two cameras A and B: Camera B is a perfectly scaled up version of camera A. Thus, the sensor in camera B is twice as large as the sensor in camera A. Both cameras have the same number of pixels, such that the pixels in camera B are twice the linear size (4 times the area) of those in camera A. Each camera has an f/2 lens and exposes a scene with constant lighting at f/2. The lens on camera B has twice the focal length of the lens on camera A so that both cameras record the same field of view. Let's say that camera A collects 10,000 photons in each pixel in a 1/100 second exposure at f/2.

How many photons are collected in each pixel in camera B in the same exposure time on that same scene with the same lighting at f/2?

Answer: 4 * 10,000 = 40,000 photons per pixel. The number of photons collected scales as the area of the pixel. The area of a pixel in camera B is 4 times that of camera A, so the answer is 4 * 10,000.

Question. We have now established that the two cameras have identical fields of view; one camera is simply twice the size of the other. Their spatial resolution on the subject is identical (e.g. pixels per picture height and the fine details appear the same). Now let's say we are taking a picture of a flat wall so there are no depth of field issues. The images have the same pixel count, the same field of view, and the same spatial resolution, taken with the same exposure time and f/stop.

Are the pictures from the two cameras the same?

Answer: No. They are the same except camera B has collected 4 times the photons. The images from both cameras are absolutely identical in every respect except that the image from camera B, the larger camera, has a higher signal-to-noise ratio (2x higher).

Collecting photons in a camera with pixels is like collecting rain drops in buckets, assuming the drops are falling randomly, which is probably the way it is. Larger buckets collect more rain drops. If you measure the number of drops collected from a bunch of buckets, you will find that the amount in each bucket is slightly different.

The noise in the counted raindrops collected in any one container is the square root of the number of rain drops. This is called Poisson statistics (e.g. check Wikipedia). So if you double the count (photons in a pixel or rain drops in a bucket), the noise in the count will go up by square root 2. For example, put out 10 buckets that each collect 10,000 rain drops on average, and you would find that the measured amount of water varies by about 1% from bucket to bucket (the standard deviation):

square root (10,000)/10,000 = 0.01 = 1%

(10,000 rain drops is about 0.5 liter of water by the way.)

So with Poisson statistics, which is the best that can be done measuring a signal based on random arrival times (e.g. of photons), the signal / noise = signal / square root(signal) = square root(signal).

So in our camera test, collecting 4x the photons increases signal-to-noise ratio by square root (4) = 2.
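The Poisson relations above are simple enough to check directly. The helper names below are hypothetical, and the sketch covers photon noise only (no read noise or thermal noise), which is the regime discussed here.

```python
import math

def poisson_snr(count):
    """Signal-to-noise ratio of a Poisson count: signal / sqrt(signal)."""
    return count / math.sqrt(count)

def relative_noise(count):
    """Fractional noise (standard deviation / mean) of a Poisson count."""
    return math.sqrt(count) / count

camera_a = 10_000   # photons per pixel in camera A
camera_b = 40_000   # photons per pixel in camera B (4x the pixel area)

print(f"camera A: S/N = {poisson_snr(camera_a):.0f}, noise = {relative_noise(camera_a):.1%}")
print(f"camera B: S/N = {poisson_snr(camera_b):.0f}, noise = {relative_noise(camera_b):.1%}")
print(f"S/N gain from 4x the photons: {poisson_snr(camera_b) / poisson_snr(camera_a):.1f}x")
```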

Fortunately, most digital cameras have such noise characteristics except at near zero signal. This means that improving noise performance can only come through increasing the photon count. That can be done 3 ways: increasing quantum efficiency (currently digital cameras are around 30%), increasing fill factor (most are probably already above 80%), or increasing the pixel size (e.g. the larger bucket collects more rain drops).

Question. Assuming there is no change in aberrations if you change f/stop, what could be done to the above test images to make camera B produce an image that is completely identical to that from camera A?

A: Assume the subject is static (no movement). Then there are two answers; extra credit for giving both.

B: Assume the subject is not static. Then there is one answer. What is it?

Answer A: We have 4x the photons, so the two answers are: reduce the exposure time by a factor of 4 (2 stops), or stop the lens down two stops.

(OPTIONAL: Increase the ISO): While changing ISO changes the perceived image, it does not change the number of photons collected.

Answer B: The one and only answer is to stop the lens down two stops. This reduces the photon count and also happens to make the depth of field the same as the smaller sensor camera, finally making the results from the two cameras identical (total photons per pixel as well as depth of field). Raising the ISO 2 stops would bring the digitized signal to the same relative level as the small camera, but that could also be done in post processing (again the photon count and signal-to-noise ratio would be the same). The ISO change would also make the metering the same as the small camera, so the metered shutter speeds would be identical too.

In real cameras, boosting the ISO is a good step as it reduces A/D quantization and reduces the read noise contribution to the signal.

So, what was the result of the exercise? In making the images from two different sized cameras identical in terms of resolution, angular coverage, exposure time, and signal-to-noise ratio, we find the final property: the depth of field is also identical.
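The exercise can be replayed in a few lines. Each stop multiplies the f/ratio by the square root of 2 and halves the light. The 50 mm and 100 mm focal lengths below are hypothetical (the exercise only requires that camera B's lens be twice as long), and the function name `stop_down` is illustrative.

```python
def stop_down(f_ratio, photons, stops):
    """Return the new f/ratio and photon count after closing the lens by `stops`."""
    new_f_ratio = f_ratio * 2 ** (stops / 2)   # each stop is a factor of sqrt(2) in f/ratio
    new_photons = photons / 2 ** stops         # each stop halves the light
    return new_f_ratio, new_photons

focal_a, focal_b = 50.0, 100.0                 # hypothetical focal lengths, B twice A
f_ratio_a, photons_a = 2.0, 10_000             # camera A at f/2
f_ratio_b, photons_b = stop_down(2.0, 40_000, 2)   # camera B stopped down two stops

diameter_a = focal_a / f_ratio_a               # aperture diameter in mm
diameter_b = focal_b / f_ratio_b

print(f"camera B: f/{f_ratio_b:.0f}, {photons_b:.0f} photons per pixel")
print(f"aperture diameters: A = {diameter_a:.1f} mm, B = {diameter_b:.1f} mm")
```

After the two-stop change the photon counts match and so do the aperture diameters, which is the condition this article identifies for equal depth of field.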

Conclusions

Given the identical photon noise, exposure time, enlargement size, and number of pixels giving the same spatial resolution (i.e. the same total image quality), digital cameras with different sized sensors will produce images with identical depths-of-field. (This assumes similar relative performance in the camera's electronics, blur filters, and lenses.) The larger format camera will use an f/ratio higher by the ratio of the sensor sizes, and an ISO higher by the square of that ratio, to achieve that equality. If the scene is static enough that a longer exposure time can be used, then the larger format camera will produce the same depth-of-field images as the smaller format camera, but will collect more photons and produce higher signal-to-noise images. Another way to look at the problem is that the larger format camera could use an even smaller aperture and a longer exposure to achieve a similar signal-to-noise ratio image with greater depth of field than a smaller format camera. Thus, the larger format camera has the advantage for producing equal or better images with equal or better depth-of-field compared to smaller format cameras.

Additional Reading

Digital Cameras: Does Pixel Size Matter? Factors in Choosing a Digital Camera. Good digital cameras are photon noise limited. This sets basic properties of sensor performance.

The f/ratio Myth and Digital Cameras.

http://en.wikipedia.org/wiki/Depth_of_field

DOFMaster - Depth of Field Calculators http://www.dofmaster.com/dofjs.html

Excellent discussion on Depth of Field http://hannemyr.com/photo/crop.html#dof

http://clarkvision.com/articles/dof_myth

First Published August 18, 2006

Last updated March 18, 2017.