Image Processing:

Zeros are Valid Image Data

(Watch out for the Clipping Police and When to Ignore Them)

by Roger N. Clark

Maximizing image contrast means using the full range of image data, including the bottom end (zeros) and the maximum (255 in 8-bit data, 65535 in 16-bit data).

Having zeros in your image data is not a sin, contrary to what some internet trolls claim.

The Night Photography Series:

All images, text and data on this site are copyrighted.

They may not be used except by written permission from Roger N. Clark.

All rights reserved.

If you find the information on this site useful,

please support Clarkvision and make a donation (link below).

Introduction

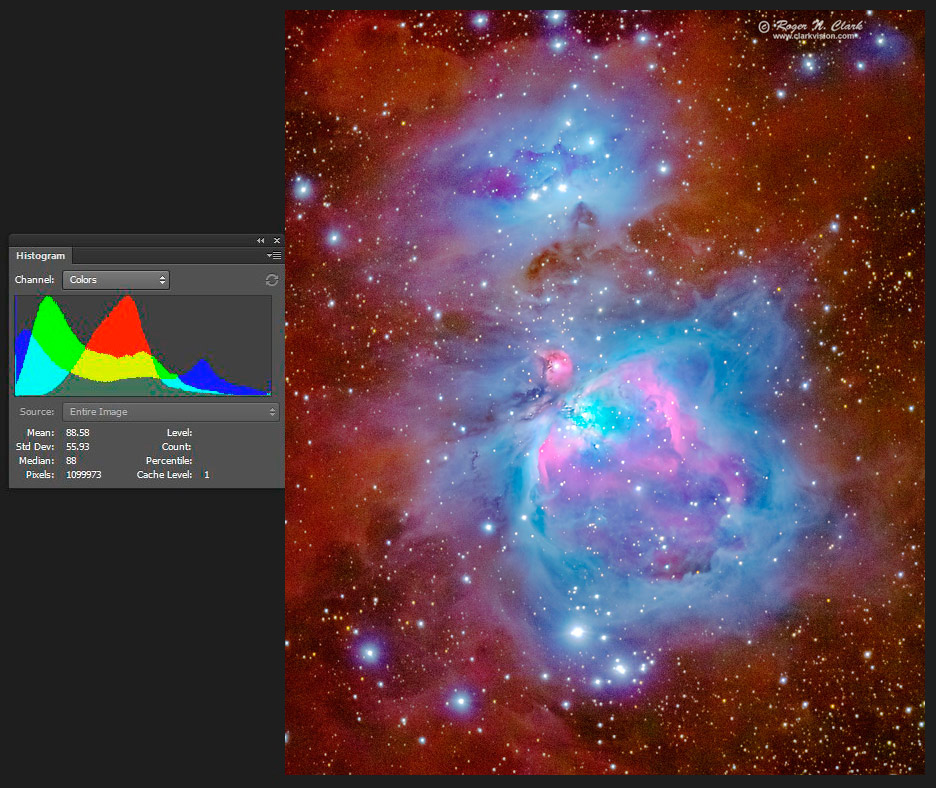

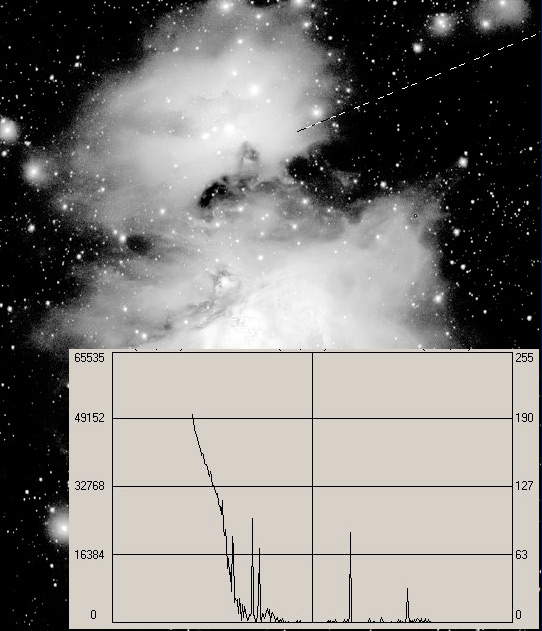

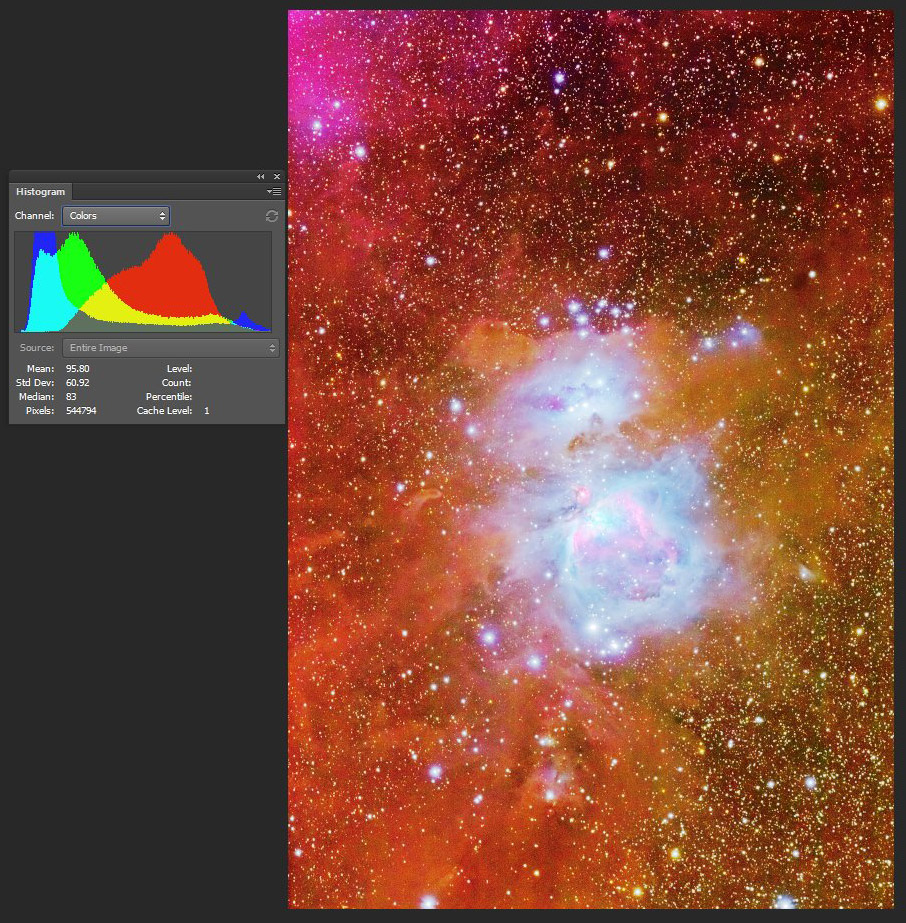

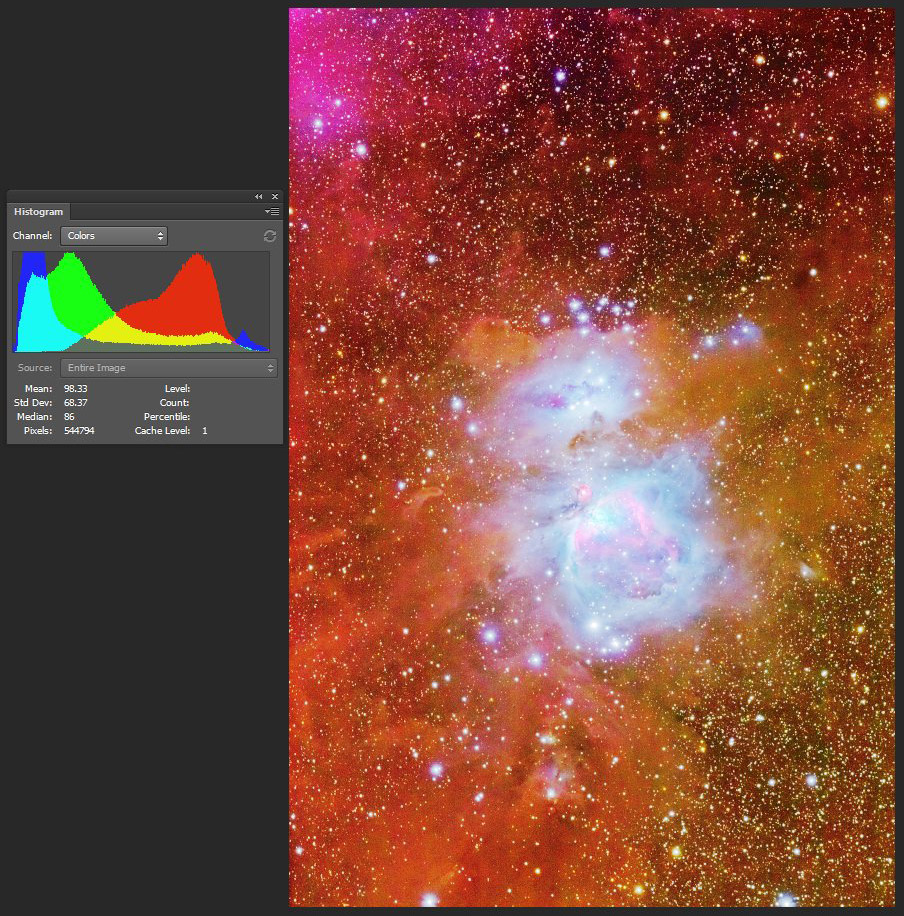

When I posted the image in Figure 1, a member of the internet clipping police launched an attack. He complained that the data were clipped and that this was bad; see the blue and cyan lines on the left side of the histogram. But zeros are valid image data. The problem is that the internet clipping troll looked at the histogram, saw a number of zero values, and declared the image bad on that basis alone. But is it? One needs to understand the context of the data values to know whether any important image information has been lost.

Figure 1. The Great Orion Nebula, Messier 42, Messier 43. Canon 7D Mark II 20-megapixel digital camera and 300 mm f/2.8 L IS II lens at f/2.8. Twenty-seven 61-second exposures at ISO 1600 were added (27.5 minutes total exposure) for the main image. For the brighter core of the nebula, four 32-second, four 10-second, eight 4-second, and six 2-second exposures were added. The short exposures were made into a high dynamic range image so that the core of the nebula was not overexposed. Total exposure time, including the core: 31 minutes. No dark frame subtraction, no flat fields. Tracking with an AstroTrac. The outer orange nebulosity is very faint, so this image demonstrates that the 7D Mark II is an amazing low-light camera, able to record so much detail in such a short exposure. The full-size image is at 2.8 arc-seconds per pixel, and the image here is a zoomed-in crop at 3.2 megapixels, so 2 times lower than full resolution (5.6 arc-seconds per pixel).
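The exposure totals and image scale quoted in the caption can be checked with a little arithmetic. The sketch below is only an illustration: the frame counts and focal length come from the caption, while the 4.1 micron pixel pitch is an assumed value that this article does not state.

```python
# Sub-exposures from the Figure 1 caption as (count, seconds per frame).
MAIN_FRAMES = [(27, 61)]
CORE_FRAMES = [(4, 32), (4, 10), (8, 4), (6, 2)]

ARCSEC_PER_RADIAN = 206265  # small-angle conversion factor


def total_minutes(frames):
    """Total integration time in minutes for a list of (count, seconds)."""
    return sum(count * seconds for count, seconds in frames) / 60


def plate_scale(pixel_pitch_um, focal_length_mm):
    """Angular size of one pixel in arc-seconds (small-angle approximation)."""
    return ARCSEC_PER_RADIAN * (pixel_pitch_um / 1000) / focal_length_mm


main_minutes = total_minutes(MAIN_FRAMES)
all_minutes = total_minutes(MAIN_FRAMES + CORE_FRAMES)

# ASSUMPTION: 4.1 micron pixel pitch; this value is not given in the article.
full_scale = plate_scale(4.1, 300)

print(f"main stack:  {main_minutes:.2f} min")
print(f"main + core: {all_minutes:.2f} min")
print(f"image scale: {full_scale:.1f} arcsec/pixel at full size, "
      f"{2 * full_scale:.1f} after a 2x reduction")
```

Under that assumed pixel pitch, the numbers agree with the caption: about 27.5 minutes for the main stack, about 31 minutes in total, and roughly 2.8 and 5.6 arc-seconds per pixel.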

To understand the significance of this image, note that the orange dust is extremely faint, amounting to detection of just a few photons. The faintest parts of the image received on average a little less than 1 photon per pixel per 61-second integration. The brightest parts of the image received over 2200 photons per pixel per exposure. Combined with the short exposures, the image represents a dynamic range of over 14 stops. That range needs to be compressed so that the full intensity range can be seen. In my processing, I work with 16-bit data in the Adobe RGB color space. (Note: Photoshop's 16-bit mode has 15 bits/channel.) For web presentation, the data need to be converted to the 8-bit sRGB color space. Certainly something is lost in that conversion. This article shows some of that loss.
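As a rough check on the dynamic range figure, the photon counts above can be converted to stops (factors of two). This is a sketch, not the calculation used for the article: the photon counts are from the text, and the contribution of the short exposures is bounded by an assumed exposure-time ratio (61 seconds versus 2 seconds).

```python
import math


def stops(brightest, faintest):
    """Dynamic range in stops (factors of two) between two signal levels."""
    return math.log2(brightest / faintest)


# Photon counts per pixel per 61-second frame, from the text above.
single_frame_stops = stops(2200, 1)

# ASSUMPTION: the 2-second frames can extend the bright end by at most the
# exposure-time ratio; the real gain depends on how bright the core is.
max_extra_stops = stops(61, 2)

# A 14-stop scene spans this intensity ratio, far more than the
# 255 nonzero levels available in an 8-bit channel.
ratio_14_stops = 2 ** 14

print(f"single 61 s frame:      {single_frame_stops:.1f} stops")
print(f"short exposures add up to {max_extra_stops:.1f} stops")
print(f"14 stops is a ratio of  {ratio_14_stops}:1")
```

A single long frame already spans about 11 stops, so "over 14 stops" once the short exposures are included is plausible, and the gap between that ratio and 8-bit output is why the range has to be compressed.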

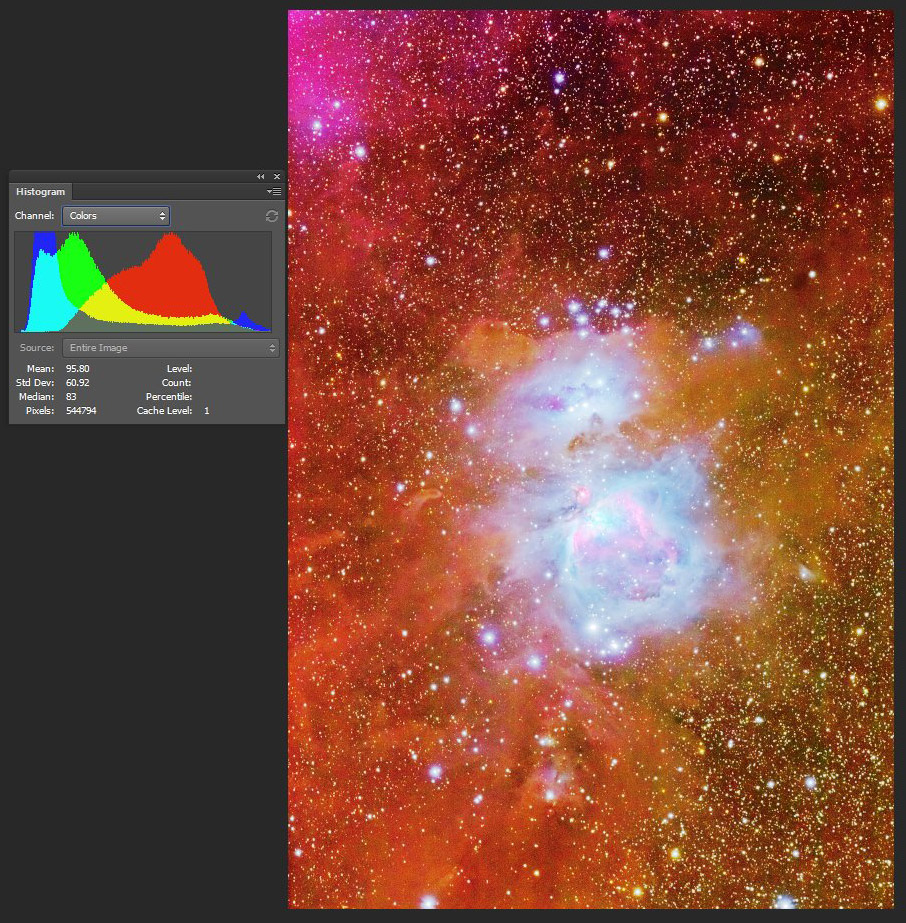

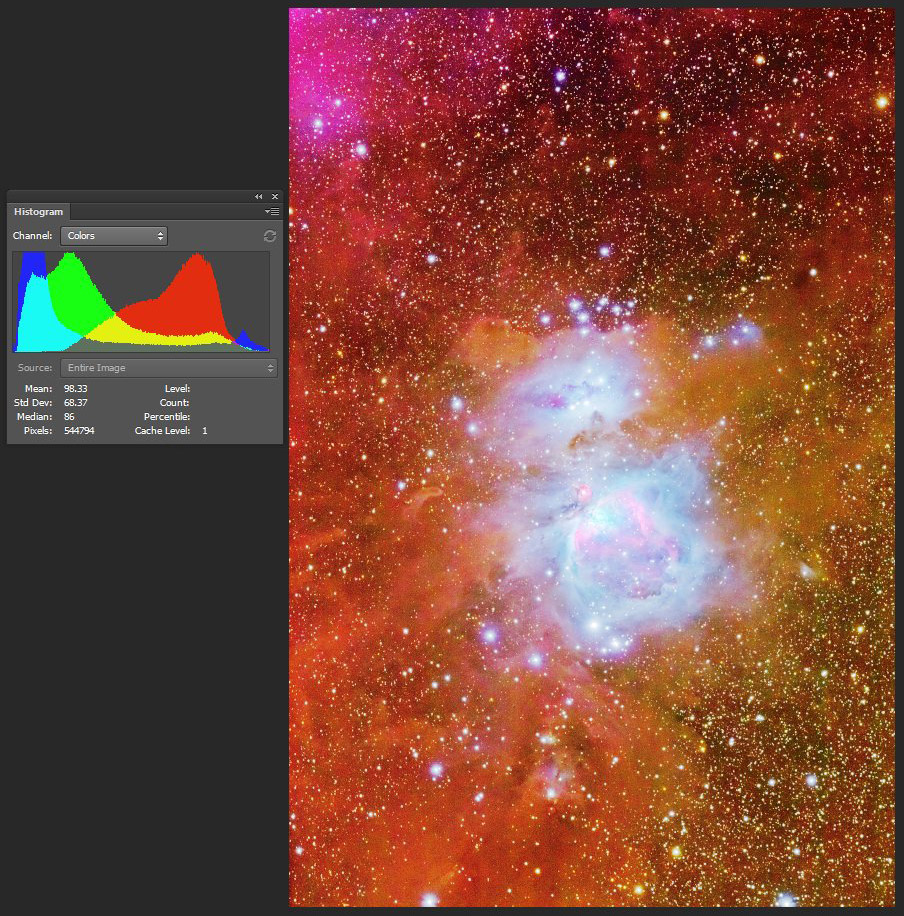

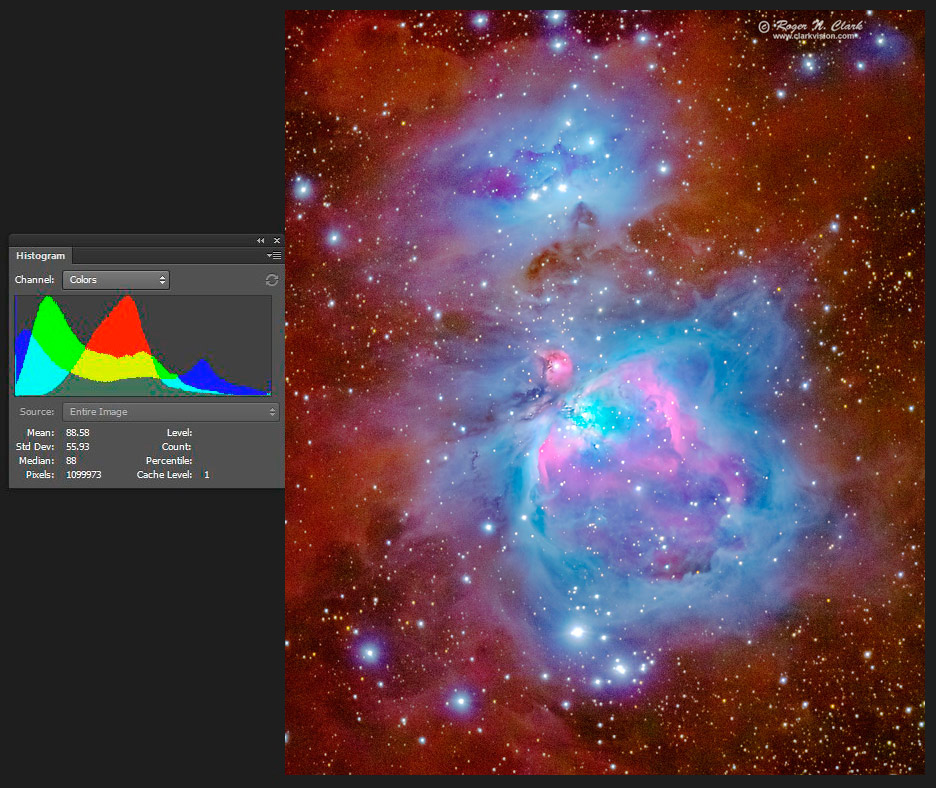

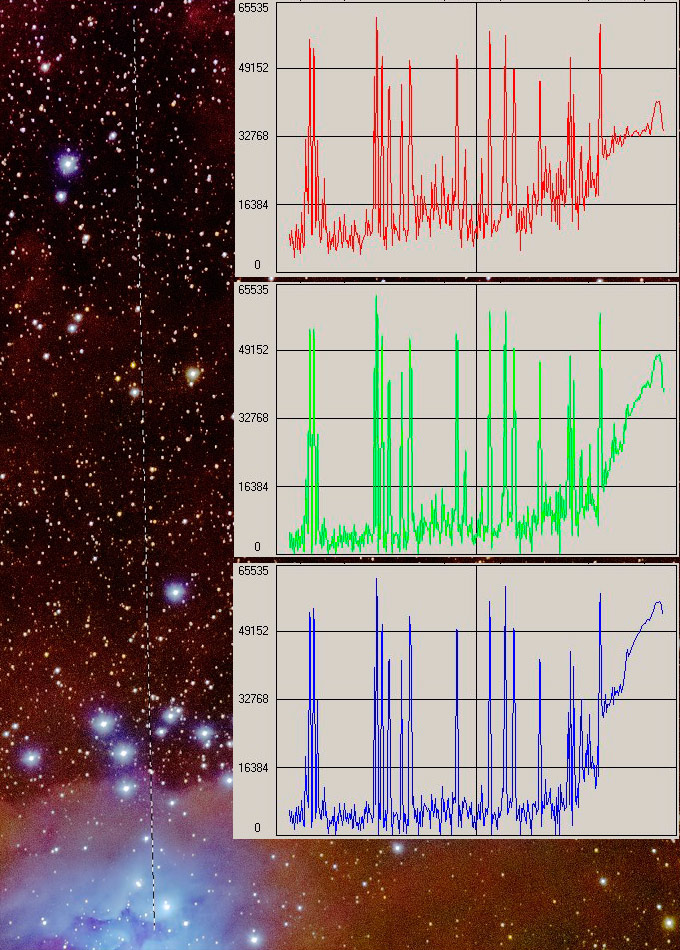

OK, so there are some zeros in the web JPEG file. Is that bad? Let's first look at the full image: the full frame (Figure 1 is a crop) and the 16-bit data. Because it is hard to show 16-bit data in an 8-bit web image, I stretched the 16-bit Adobe RGB TIFF file and show the result with the histogram in Figure 2.

Figure 2. The histogram for the 3 color channels (right) is shown for the 16-bit Adobe RGB data, which were first stretched so one can see the low-end details. In these data, there are no pixels at the left edge of the histogram within the range that would show in the plot, or certainly not more than a very small number.

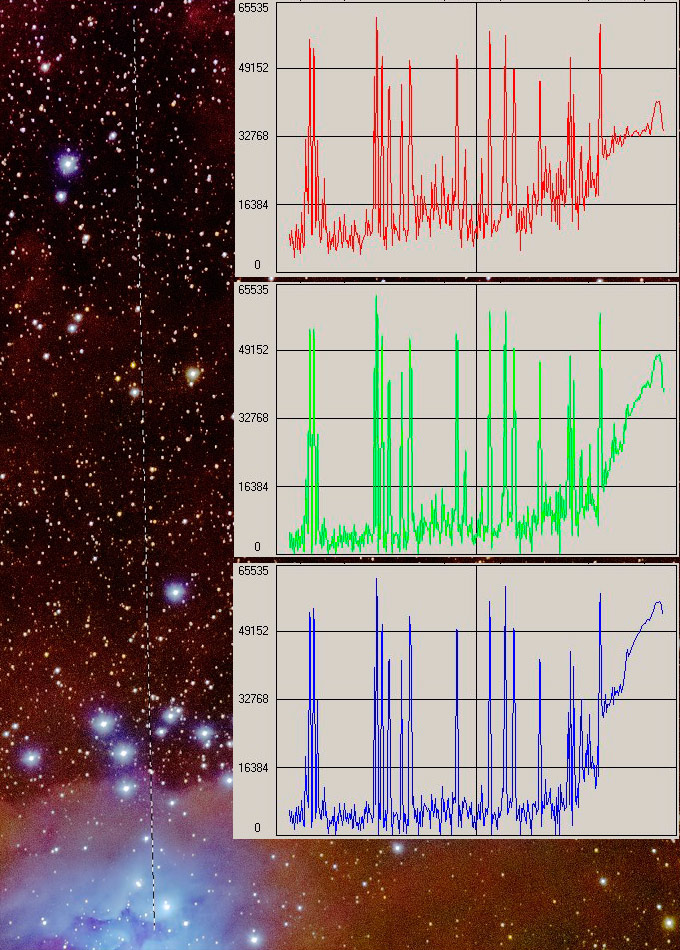

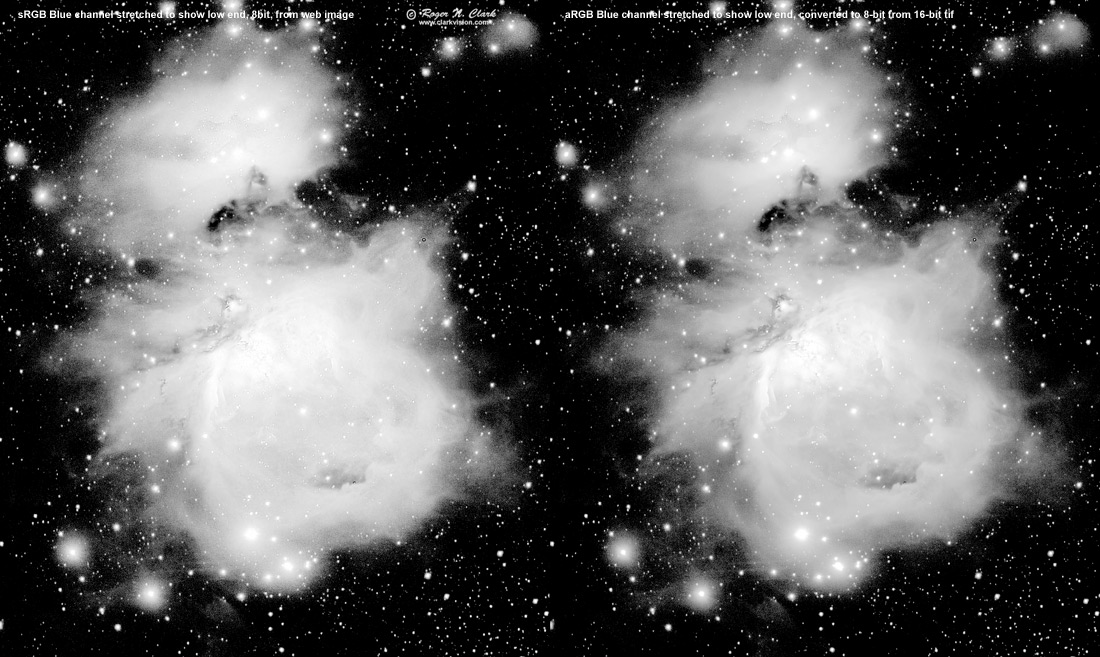

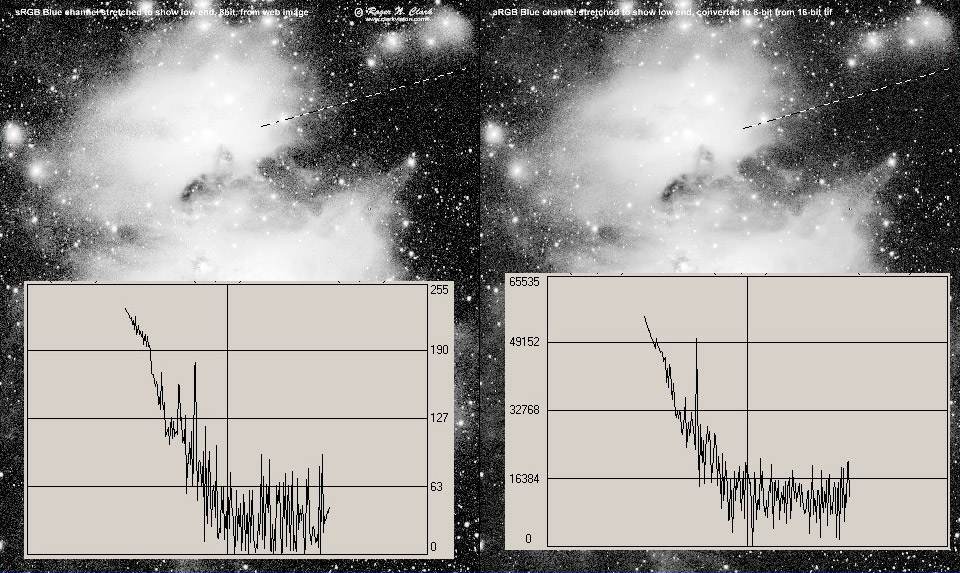

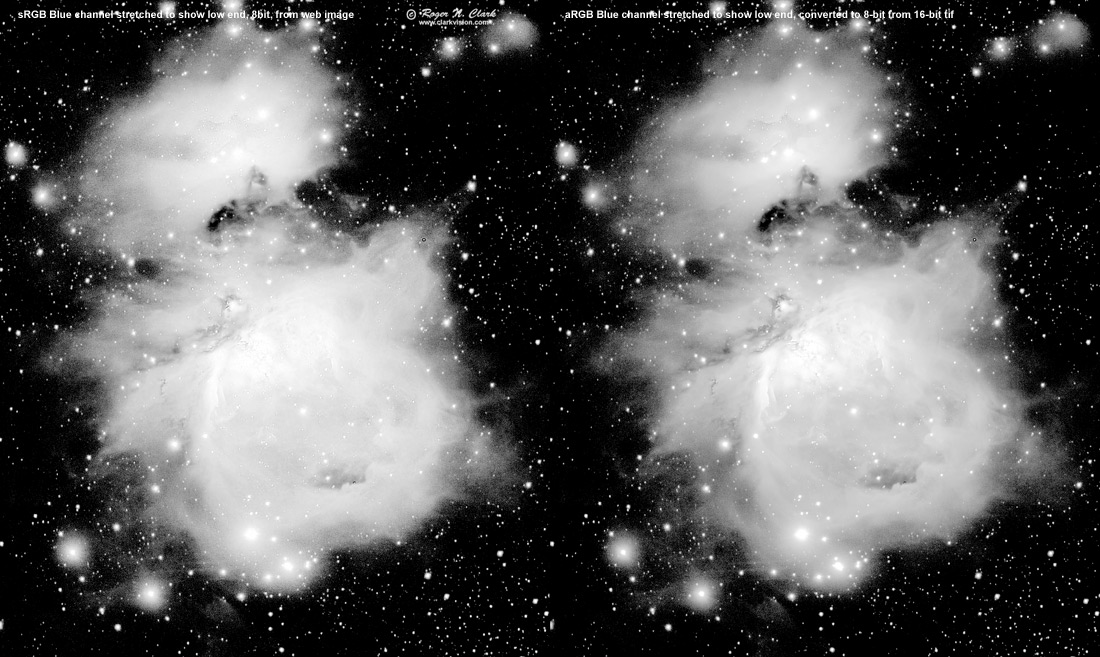

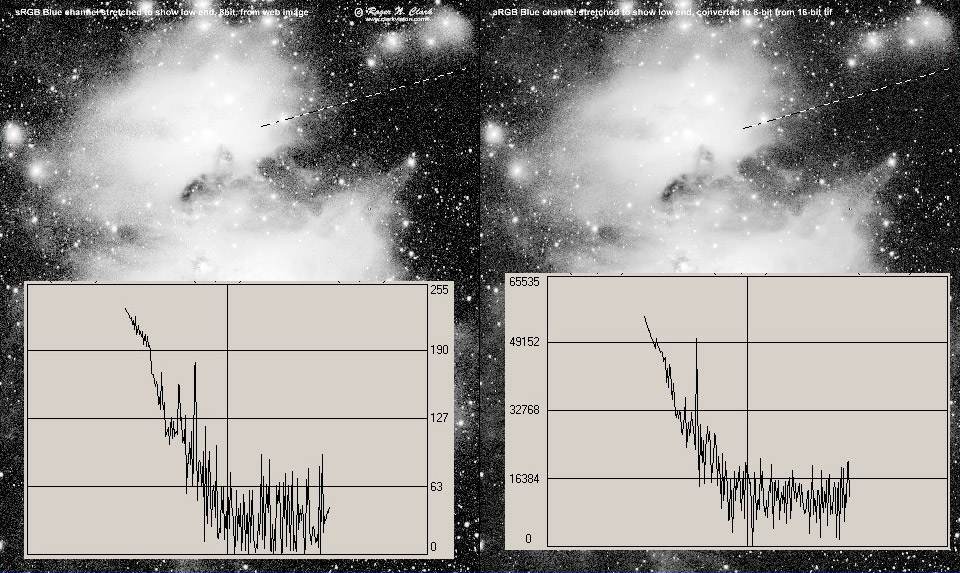

The internet clipping troll complained most about the blue channel, so the blue channel is shown in Figure 3, stretched again to show the faint end. Both the 8-bit and 16-bit (before converting for the web) versions are shown. The results show that the 16-bit data have much less noise, but both images have a nice, smoothly varying low end. There are no large contiguous areas that are clipped to zero. So what is "clipped"?

Figure 3. Blue channel after stretching to show the low end. Left is from the online web image. On the right is the 16-bit data similarly stretched, then converted to 8-bit for this web presentation. Compare the two images. There are two key factors: 1) the 16-bit image shows less noise, and 2) there are no large areas that are completely black. The high-resolution version is here.
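The distinction between scattered zero pixels and large black areas can be made quantitative. The following is a minimal sketch, assuming a single channel stored as a list of rows of integers; the function names and the two tiny test channels are hypothetical, not part of any tool used for this article. It reports the fraction of zero pixels (what a histogram shows) and the size of the largest connected block of zeros (what a histogram cannot show).

```python
from collections import deque


def zero_fraction(channel):
    """Fraction of pixels in the channel that are exactly zero."""
    total = sum(len(row) for row in channel)
    zeros = sum(value == 0 for row in channel for value in row)
    return zeros / total


def largest_zero_region(channel):
    """Size in pixels of the largest 4-connected region of zeros."""
    height, width = len(channel), len(channel[0])
    seen = [[False] * width for _ in range(height)]
    largest = 0
    for y in range(height):
        for x in range(width):
            if channel[y][x] != 0 or seen[y][x]:
                continue
            # Breadth-first flood fill over neighboring zero pixels.
            size = 0
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                size += 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and not seen[ny][nx] and channel[ny][nx] == 0):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            largest = max(largest, size)
    return largest


# Two hypothetical channels with the same number of zeros.
scattered = [[3, 0, 2, 4], [2, 5, 0, 3], [0, 3, 4, 2], [4, 2, 3, 0]]
blocked = [[0, 0, 2, 4], [0, 0, 5, 3], [3, 3, 4, 2], [4, 2, 3, 1]]

for name, channel in (("scattered", scattered), ("blocked", blocked)):
    print(name, zero_fraction(channel), largest_zero_region(channel))
```

Both test channels have the same zero fraction, so their histograms would look equally "clipped" at the left edge, but only the second contains a contiguous black block.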

So is there a clipping problem, and if so, how bad is it? To understand the data, one needs to look at some intensity traverses. Figures 4 and 5 show two intensity traverses. Figure 4 also shows an indicator of how extremely faint the signal recorded in this image is: note the bar that indicates the intensity range of one photon (it applies only to the low end, because the intensity scale is non-linear).

Figure 4. The red, green, and blue traverses for a part of the image. The traverses show no clipped pixels in the red or green channels. The blue channel shows some pixels that reach near the bottom of the intensity range, but no pixels actually go to zero. These lower pixels get clipped when converted to 8-bit sRGB, discussed below. Note the approximately 1-photon I-bar. It represents the intensity range of one photon at the low end of the intensity scale. Higher up, the I-bar decreases in size because the intensity scale is not linear. At the low-intensity end, in the fainter parts of the orange dust, only a few blue photons were detected, a few more green, and the most photons in the red channel. The large upward spikes are stars.

Figure 5. Red, green, and blue intensity traverses that include some of the darkest parts of the image. No red pixels, and only a few green and blue pixels, reach near the zero level. As in Figure 4, the noise envelope near the bottom of the intensity range represents only a few photons. The large upward spikes are stars.

It should be clear by now that the few low pixels represent the extreme random noise fluctuations,

and not some endemic problem with the image processing as charged by the clipping trolls.

One of course could raise the level a little so that even these outliers do not get close to the

zero level, but that reduces contrast in the faintest parts of the image.
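An intensity traverse of the kind shown in Figures 4 and 5 is simply the pixel values of each channel along a line through the image. Here is a minimal sketch, assuming the image is held as rows of (red, green, blue) tuples; the function names and the tiny sample row are hypothetical and are not the software used to make the figures.

```python
import statistics


def traverse(image, row):
    """Return the red, green, and blue values along one image row."""
    pixels = image[row]
    return {
        "red": [p[0] for p in pixels],
        "green": [p[1] for p in pixels],
        "blue": [p[2] for p in pixels],
    }


def summarize(values):
    """Minimum, median, maximum, and number of zeros along a traverse."""
    return {
        "min": min(values),
        "median": statistics.median(values),
        "max": max(values),
        "zeros": values.count(0),
    }


# Hypothetical one-row image: faint background with a single bright star.
image = [
    [(12, 9, 3), (14, 8, 0), (11, 10, 2), (250, 240, 230), (13, 9, 1)],
]

channels = traverse(image, 0)
for name, values in channels.items():
    print(name, summarize(values))
```

In this made-up row, the blue channel has one pixel at zero while its median stays above zero, which is the pattern described above: an outlier of the noise reaching the bottom of the scale, not a clipped region.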

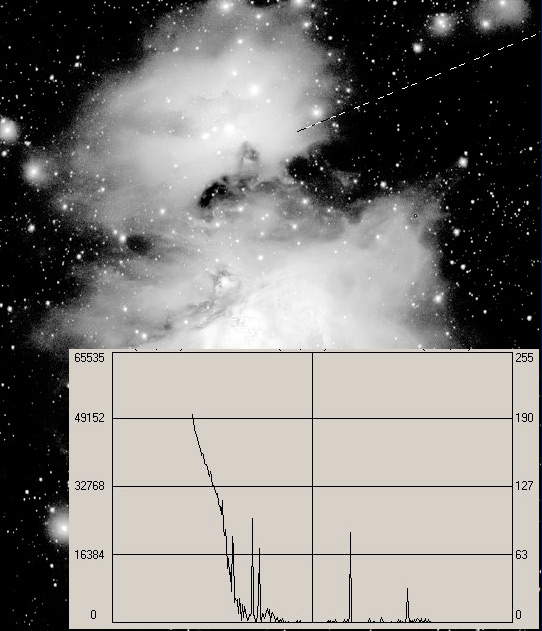

What does serious clipping look like? The image in Figure 6 shows an example, and a traverse is shown

in Figure 7. The traverse shows a lot of the data falls below zero. That is extreme clipping.

Figure 6. Example of serious clipping in an image.

Figure 7. Traverse of the clipped image showing that significant portions of the data

fall below zero.

The Adobe RGB 16-bits/channel Conversion to 8-bits/channel sRGB

The stretched 16-bits/channel Adobe RGB image that was used to produce the image in Figure 2 was converted to sRGB (Figure 8), still at 16-bits/channel, to show the change in the histogram. (The image was further converted to 8-bit for display here, but the histogram is of the 16-bit data, as is the histogram in Figure 2.) Note the change in the histogram: the histogram profile has moved closer to the left and right ends of the plot. This is because the sRGB color space is smaller than the Adobe RGB color space, which means that the extremes in the Adobe RGB data will be clipped. Most color-managed image editors will try to make that change with as little perceptual change as possible, but some loss is inevitable. The conversion to 8-bits/channel results in more loss. The sum of the two losses and the changes that appear are perhaps better illustrated by the traverses shown in Figure 9.

Figure 8. The image from Figure 2 (Adobe RGB) is here shown after conversion to sRGB. Note the change

in the histogram whose ends move closer to the left and right axes, potentially clipping some data.

Figure 9. Traverses of the blue channel for the image in Figure 8 (right, sRGB 8-bit data) and Figure 2 (left, Adobe RGB 16-bit data). Note that the 8-bit sRGB data are noisier and more pixels get clipped.

The conversion from 16-bit Adobe RGB to 8-bit sRGB does increase noise and clips more data. But in the examples here, only the extremes of the noise distribution get clipped, and the pixels that get clipped are randomly distributed, minimizing their perceptual impact. This is fine in my opinion and of no detrimental consequence to the perceptual image quality.
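Why more pixels reach zero at 8 bits can be illustrated with a small simulation. This is a sketch under simplifying assumptions, not a model of the actual conversion: it ignores the color space change and the tone curve, treats the background as Gaussian noise, and uses hypothetical values for the background level and noise (600 and 250 in 16-bit units).

```python
import random


def to_16bit(value):
    """Clamp to the 16-bit range and round to an integer level."""
    return round(min(max(value, 0.0), 65535.0))


def to_8bit(value_16):
    """Rescale a 16-bit level to 8 bits (65535 maps to 255)."""
    return round(value_16 / 257)


random.seed(1)

# ASSUMPTION: hypothetical faint background, mean 600 and sigma 250
# in 16-bit units, so the mean sits a bit over 2 sigma above zero.
MEAN_16, SIGMA_16, COUNT = 600.0, 250.0, 100_000

pixels_16 = [to_16bit(random.gauss(MEAN_16, SIGMA_16)) for _ in range(COUNT)]
pixels_8 = [to_8bit(v) for v in pixels_16]

zero_fraction_16 = pixels_16.count(0) / COUNT
zero_fraction_8 = pixels_8.count(0) / COUNT
mean_8 = sum(pixels_8) / COUNT

print(f"zeros at 16 bits: {zero_fraction_16:.2%}")
print(f"zeros at 8 bits:  {zero_fraction_8:.2%}")
print(f"mean 8-bit level: {mean_8:.2f}")
```

With these assumed values, the coarser 8-bit levels push a larger share of the noise tail to zero than the 16-bit data had, yet the zeros remain a small fraction of randomly placed pixels and the mean level stays above zero.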

Conclusions

Whether image data that appear to be clipped, or close to clipping, are a problem is all a matter of context. As S/N drops in low-signal regions, the extremes of the noise envelope might get clipped. This is of no consequence to the visual perception of the image. If one keeps the majority of the noise envelope, including the mean of the noise distribution, above zero, the image can show good contrast at the low end. What should be avoided is clipping of large areas of an image as a uniform block. Sometimes even this might be necessary if you need to hide some bad pattern noise that is common in early digital cameras. No data can at least look better than bad data.

If you produce images with this attention to detail, ignore the Clipping Trolls.

Maximizing contrast at the very faintest parts of an image can provide for good

contrast at those low intensities enabling them to be seen better. The side effect

of some random pixels getting clipped is of no real perceptual consequence.

Few cameras can produce this quality of data at the few-photon level. The Canon 7D Mark II 20-megapixel digital camera is the only Canon camera as of this writing that I have tested that can perform at this level in long exposures. The key to such performance is on-sensor dark current suppression, low read noise and low dark current (even though the magnitude of dark current is suppressed, the noise from the dark current is not). The images here show uniformity in the background to the electron level, an amazing feat for a camera working at ambient temperature. See the Full Review of the Canon 7D Mark II for details.

Nikon Notes. Many Nikon cameras clip the raw data. At some ISOs

on some Nikon cameras, well over half the pixels can be clipped. Yet we don't hear

Nikon users complaining about clipped detail in the shadows of their images.

Indeed, because the clipping produces a random pattern, its impact on image quality is minimal, and Nikon cameras have a good reputation for producing high dynamic range images with great shadow detail.

Clipping is bad for stacking astrophotos to reduce noise. Avoid any processing before

stacking that clips any data. Does this mean Nikons are not good for astrophotography?

Not at all. Simply expose an astrophoto long enough to bring the sky fog to

a level significantly above the read noise (true with any brand camera), and no

data will get clipped.
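The reason clipping hurts stacking, and why a brighter sky level avoids the problem, can be seen in a small simulation. This is a simplified sketch with hypothetical numbers (signal levels and noise in arbitrary electron-like units, Gaussian noise only); it is not a model of how any particular camera clips its raw data.

```python
import random


def stacked_mean(signal, sigma, frames, clip, rng):
    """Average many noisy samples of one pixel, optionally clipping at zero."""
    total = 0.0
    for _ in range(frames):
        value = rng.gauss(signal, sigma)
        if clip:
            value = max(value, 0.0)  # negative excursions are lost
        total += value
    return total / frames


rng = random.Random(7)
SIGMA, FRAMES = 3.0, 20_000  # hypothetical per-frame noise and frame count

# Faint pixel: true level 1.0, well inside the noise.
faint_unclipped = stacked_mean(1.0, SIGMA, FRAMES, clip=False, rng=rng)
faint_clipped = stacked_mean(1.0, SIGMA, FRAMES, clip=True, rng=rng)

# Same noise, but with sky fog raising the level to 15.0 (5 sigma above zero).
bright_clipped = stacked_mean(15.0, SIGMA, FRAMES, clip=True, rng=rng)

print(f"faint, no clipping:  {faint_unclipped:.2f}")
print(f"faint, clipped:      {faint_clipped:.2f}")
print(f"raised sky, clipped: {bright_clipped:.2f}")
```

In this sketch the unclipped stack converges on the true level, while the clipped stack of the faint pixel is biased upward because only the negative noise excursions were removed. Once the level sits several sigma above zero, clipping has essentially nothing to remove and the stack is unbiased.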

http://clarkvision.com/articles/imageprocessing-zeros-are-valid-image-data

First Published April 25, 2015

Last updated April 25, 2015